Build Gym-Style interface¶

OpenAI Gym is widely used for reinforcement learning research. It is often desirable to have “Gym-style” environment interface to perform RL tasks.

In this tutorial, you will learn the following:

Build an robot ant with SAPIEN pure Python API

Set and get the states of objects

Pack current simulation and restore it later

Customize a simple Ant environment for training RL

Note

If you haven’t already done so, make sure you’ve completed the steps in A minimal example and SAPIEN assets and rendering section before starting tutorial of the robotics part.

Write a SAPIEN environment base¶

To build an Gym-style interface for control tasks, we may first write an virtual class base like Gym MujocoEnv. This will be a virtual class with many unimplemented member functions.

Environment base constructor¶

The major member variables can be divided into three groups:

For running simulation

For rendering

For apply control signal and get observation

Based on these principals, the constructor can be organized as follow:

98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 | class SapienControlEnv:

def __init__(self, frame_skip, timestep=0.01, gravity=(0, 0, -9.8)):

self.frame_skip = frame_skip

self.timestep = timestep

self._engine = sapien.Engine()

self._renderer = sapien.OptifuserRenderer()

self._engine.set_renderer(self._renderer)

self.sim = self._engine.create_scene(gravity=np.array(gravity))

self.sim.set_timestep(timestep)

self.viewer = self.viewer_setup()

self.model, self._init_state = self._load_model()

self._dof = self.model.dof

self._root = self.model.get_links()[0]

# Unlike MuJoCo MJCF, the actuator information is not defined in the xml file

# So you should define it explicitly in Python

from gym.spaces.box import Box

actuator_info = self._load_actuator()

self._actuator_name, _actuator_idx, low, high = list(zip(*actuator_info))

self._actuator_idx = np.array(_actuator_idx)

self.action_spec = Box(low=np.array(low), high=np.array(high), dtype=np.float32)

|

Create the scene and renderer as usual, load robot model and actuator information in order to take action.

Get observation for agent¶

To perform RL tasks, we also need to get the observation after each simulation step. The following function shows how to get the internal state of agent.

136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 | def get_qpos(self):

if not self._root:

root = [self._root.pose.p, self._root.pose.q]

else:

root = []

return np.concatenate(root + [self.model.get_qpos().ravel()])

def get_qvel(self):

if not self._root:

root = {self._root.velocity, self._root.angular_velocity}

else:

root = []

return np.concatenate(root + [self.model.get_qvel().ravel()])

def state_vector(self):

return np.concatenate([

self.get_qpos(),

self.get_qvel()

])

|

Note

Unlike MuJoCo, there is no free joint in SAPIEN. Thus, get_qpos() functions will not return the pose of root link. In order to capture this information, we need to do it explicitly.

Virtual functions to be implemented¶

There will be some model-specific functions to be implemented for child class.

164 165 166 167 168 169 170 171 172 173 174 175 176 177 | def _load_model(self) -> Tuple[sapien.Articulation, np.ndarray]:

raise NotImplementedError

def _load_actuator(self) -> List[Tuple[str, int, float, float]]:

raise NotImplementedError

def _get_obs(self) -> np.ndarray:

raise NotImplementedError

def reset_model(self) -> np.ndarray:

raise NotImplementedError

def viewer_setup(self, width=640, height=480) -> sapien.OptifuserController:

raise NotImplementedError

|

Build an ant robot purely by Python¶

Now we are going to implement an Ant environment. To do that, an articulated ant model is needed. Besides loading from a URDF file described here Load SAPIEN assets, an articulated object/robot can also be directly created with Python.

The general idea is to create an ArticulationBuilder for ant and several LinkBuilder for each articulation link as follow:

You may refer to The entire code or run an standalone example here for details.

This function will return an ArticulationBuilder filled with an ant model. This builder can be used more than once to build many ants as you like.

Ant environment class¶

Then implemented the Ant Environment as follow:

180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 | class SapienAntEnv(SapienControlEnv):

def __init__(self, frame_skip, timestep=0.01, gravity=(0, 0, -9.8)):

SapienControlEnv.__init__(self, frame_skip, timestep, gravity)

self.sim.add_ground(-1)

def step(self, a):

x_before = self._root.pose.p[0]

self.do_simulation(a, self.frame_skip)

x_after = self._root.pose.p[0]

forward_reward = (x_after - x_before) / self.dt

ctrl_cost = .5 * np.square(a).sum()

survive_reward = 1.0

reward = forward_reward - ctrl_cost + survive_reward

state = self.state_vector()

not_done = 0.2 <= state[2] <= 1.0

ob = self._get_obs()

return ob, reward, not not_done, dict(

reward_forward=forward_reward,

reward_ctrl=-ctrl_cost,

reward_survive=survive_reward)

def _load_model(self) -> Tuple[sapien.Articulation, np.ndarray]:

ant_builder = create_ant_builder(self.sim)

ant = ant_builder.build(fix_base=False)

# Step once to make sure everything goes smoothly

self.sim.step()

init_state = ant.pack()

return ant, init_state

def _load_actuator(self) -> List[Tuple[str, int, float, float]]:

assert self.model, "Ant model should be loaded before actuator"

actuator_info = []

idx = 0

for joint in self.model.get_joints():

if joint.get_dof() == 0:

continue

if joint.get_name().startswith("hip"):

actuator_info.append((joint.get_name(), idx, -2000, 2000))

elif joint.get_name().startswith("ankle"):

actuator_info.append((joint.get_name(), idx, -1000, 1000))

idx += joint.get_dof()

return actuator_info

def _get_obs(self) -> np.ndarray:

return self.state_vector()[2:]

def reset_model(self) -> np.ndarray:

self.model.unpack(self._init_state)

# Perturb the joint velocity a little bit

self.model.set_qvel(self.model.get_qvel() + np.random.rand(self.model.dof) * 0.1)

return self._get_obs()

def viewer_setup(self, width=640, height=480) -> sapien.OptifuserController:

self.sim.set_ambient_light([.4, .4, .4])

self.sim.set_shadow_light([1, -1, -1], [.5, .5, .5])

self.sim.add_point_light([2, 2, 2], [1, 1, 1])

self.sim.add_point_light([2, -2, 2], [1, 1, 1])

self.sim.add_point_light([-2, 0, 2], [1, 1, 1])

controller = sapien.OptifuserController(self._renderer)

controller.set_current_scene(self.sim)

controller.set_camera_position(0, 1, 10)

controller.set_camera_rotation(0, -1.5)

controller.show_window()

return controller

|

The highlighted lines show how to save the internal simulation states and load it later. Note that this function is only for an single model but not entire simulation scene.

Use Ant environment¶

Now we can use our SapienAntEnv as Gym.

249 250 251 252 253 254 255 256 257 | if __name__ == '__main__':

env = SapienAntEnv(5, timestep=1 / 100)

observation = env.reset()

for i in range(1000):

env.render()

action = env.action_spec.sample()

observation, reward, done, info = env.step(action)

env.close()

|

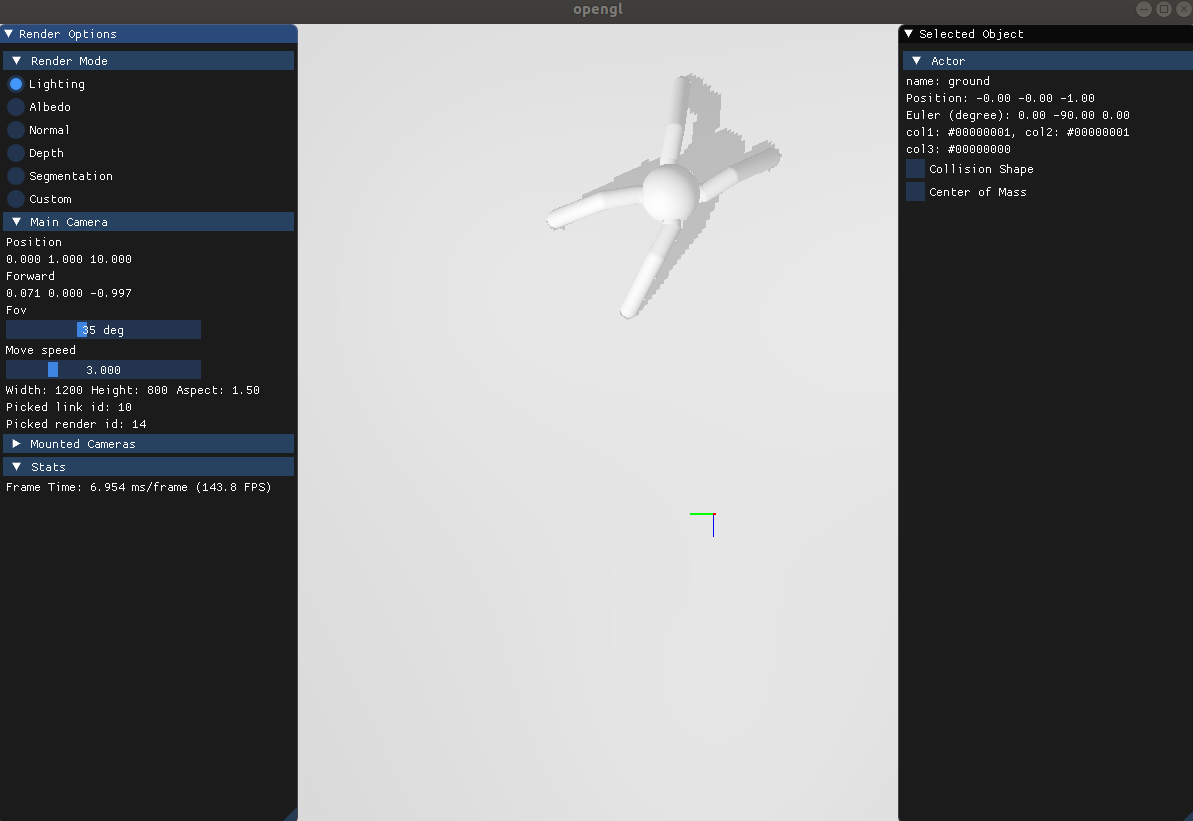

You will see something like that: